Extract-Transform-Load (ETL)

End-to-end data extraction and transformation pipelines, data loading for consumption by multiple services, and scheduling systems for periodic jobs.

ETL Functionality

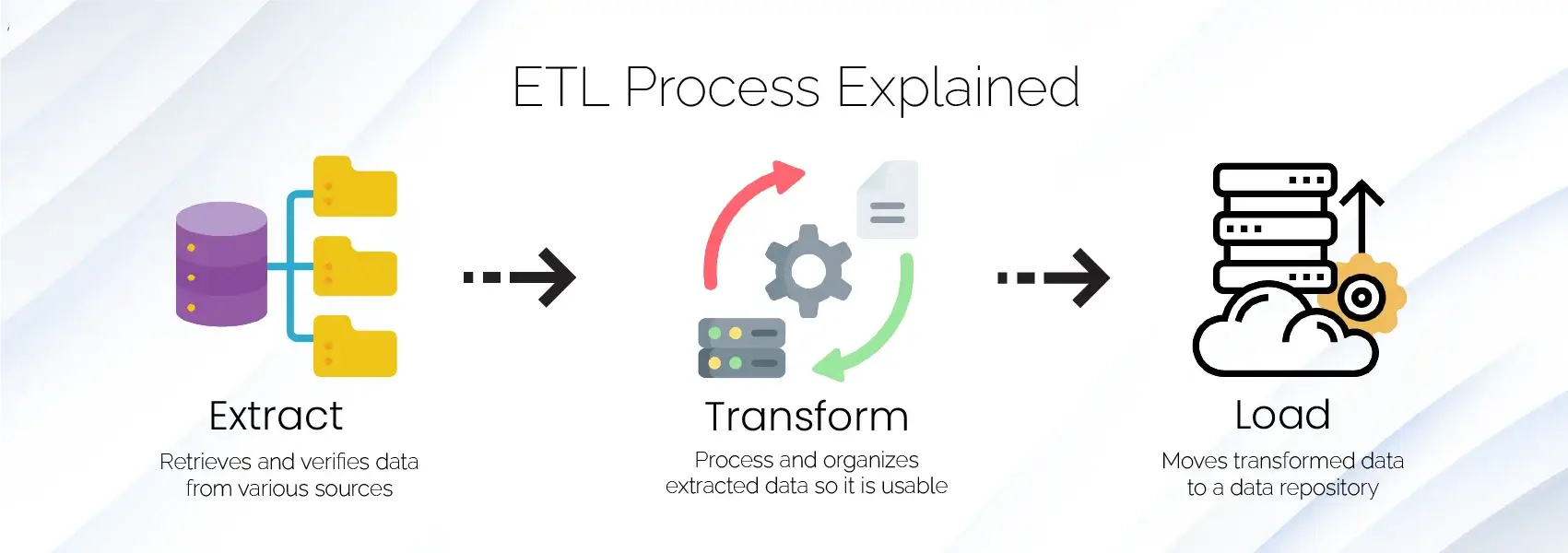

Extract

The very first step is to extract data from heterogeneous sources such as APIs, sensors, transactional databases, marketing tools, and business systems. Some of these sources produce structured output from widely used systems, while others, such as JSON server logs, are semi-structured. There are three main ways to extract data: partial extraction without update notification, partial extraction with update notification, and full extraction. Full extraction reads the entire dataset on every run, while partial extraction reads only the records that changed since the last run, either by detecting the changes itself or by relying on the source system to report them.
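The following is a minimal Python sketch of the three extraction methods against an in-memory SQLite table. The `orders` table, its `updated_at` column, the watermark value, and the list of changed ids standing in for an update notification are all hypothetical, chosen only to illustrate the idea; real sources expose changes in their own ways (change-data-capture feeds, webhooks, audit columns).

```python
import sqlite3


def make_source():
    """Build a hypothetical in-memory source table for illustration only."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL, updated_at TEXT)")
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?, ?)",
        [(1, 10.0, "2024-01-01"), (2, 25.5, "2024-01-03"), (3, 7.25, "2024-01-05")],
    )
    return conn


def full_extract(conn):
    """Full extraction: read every row on every run."""
    return conn.execute("SELECT id, amount, updated_at FROM orders ORDER BY id").fetchall()


def partial_extract(conn, watermark):
    """Partial extraction without update notification.

    Detect changes ourselves by comparing a timestamp column against the
    high-water mark saved from the previous run (ISO dates compare as strings).
    Returns the changed rows and the new watermark to store for the next run.
    """
    rows = conn.execute(
        "SELECT id, amount, updated_at FROM orders WHERE updated_at > ? ORDER BY id",
        (watermark,),
    ).fetchall()
    new_watermark = max((r[2] for r in rows), default=watermark)
    return rows, new_watermark


def notified_extract(conn, changed_ids):
    """Partial extraction with update notification.

    `changed_ids` is a hypothetical stand-in for whatever the source system
    reports as changed; we fetch only those rows.
    """
    changed_ids = list(changed_ids)
    if not changed_ids:
        return []
    placeholders = ",".join("?" for _ in changed_ids)
    return conn.execute(
        f"SELECT id, amount, updated_at FROM orders WHERE id IN ({placeholders}) ORDER BY id",
        changed_ids,
    ).fetchall()
```

Storing the returned watermark between runs is what lets the next partial extraction skip rows it has already seen; a full extraction needs no such state but rereads everything each time.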

Transform

The second step transforms the extracted data into a format that several applications can use. In this step, data is transformed, mapped, and cleansed to fit a target schema that meets operational needs. Several types of transformation are applied along the way to ensure the integrity and quality of the data. The transformed data is generally not loaded directly into the target data store; instead, it is first loaded into a staging database.
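As an illustration of mapping, cleansing, and validation, here is a small Python sketch that turns semi-structured JSON lines into a fixed target schema. The `Order` dataclass, the `FIELD_MAP` field names, and the rule that amounts are stored as integer cents are assumptions made for the example, not a prescribed schema. Rows that fail parsing or validation are set aside with a reason rather than silently dropped.

```python
import json
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Order:
    """Hypothetical target schema used only for this illustration."""
    order_id: int
    amount_cents: int
    order_date: date


# Mapping from (assumed) source field names to target field names.
FIELD_MAP = {"id": "order_id", "amt": "amount", "ts": "order_date"}


def transform(raw_lines):
    """Parse, map, cleanse and validate raw JSON lines.

    Returns (good, rejected), where rejected holds (line, reason) pairs.
    """
    good, rejected = [], []
    for line in raw_lines:
        try:
            record = json.loads(line)  # JSONDecodeError is a ValueError subclass
            # Map: keep only known fields, renamed to the target schema.
            mapped = {FIELD_MAP[k]: v for k, v in record.items() if k in FIELD_MAP}
            order = Order(
                order_id=int(mapped["order_id"]),
                # Cleanse: trim whitespace and a leading "$", store money as integer cents.
                amount_cents=round(float(str(mapped["amount"]).strip().lstrip("$")) * 100),
                # Cleanse: keep only the date part of an ISO timestamp.
                order_date=date.fromisoformat(str(mapped["order_date"])[:10]),
            )
            # Validate: a simple business rule on the cleansed value.
            if order.amount_cents < 0:
                raise ValueError("negative amount")
            good.append(order)
        except (ValueError, KeyError, TypeError) as exc:
            rejected.append((line, str(exc)))
    return good, rejected
```

Keeping rejected rows alongside the reason they failed makes data-quality problems visible instead of letting them disappear between extraction and loading.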

Load

The load step writes the transformed data from the staging area to a target database. Depending on the application's requirements, this process can be simple or complex. All three ETL steps can be implemented with custom code or with an off-the-shelf ETL tool.
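A minimal sketch of the staging-to-target load, assuming SQLite via Python's built-in `sqlite3` module and hypothetical `staging_orders` and `orders` tables. It copies staged rows into the target and clears the staging table inside a single transaction, so a failure partway through leaves both tables as they were.

```python
import sqlite3


def create_tables(conn):
    """Create a hypothetical staging table and target table."""
    conn.executescript(
        """
        CREATE TABLE staging_orders (order_id INTEGER, amount_cents INTEGER, order_date TEXT);
        CREATE TABLE orders (
            order_id INTEGER PRIMARY KEY,
            amount_cents INTEGER NOT NULL,
            order_date TEXT NOT NULL
        );
        """
    )


def load_from_staging(conn):
    """Copy staged rows into the target table, then empty staging.

    Returns the number of staged rows that were loaded.
    """
    with conn:  # commits on success, rolls back if any statement raises
        staged = conn.execute("SELECT COUNT(*) FROM staging_orders").fetchone()[0]
        # Rows whose order_id already exists in the target are replaced, not duplicated.
        conn.execute(
            "INSERT OR REPLACE INTO orders (order_id, amount_cents, order_date) "
            "SELECT order_id, amount_cents, order_date FROM staging_orders"
        )
        conn.execute("DELETE FROM staging_orders")
    return staged
```

`INSERT OR REPLACE` is one simple way to make reloading the same keys safe; more complex loads might merge, version, or partition rows instead.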

An ETL Pipeline

An ETL pipeline is the mechanism through which ETL processes run. A data pipeline is a set of activities and tools for moving data from one system, with its own way of storing and processing data, to another. Pipelines make it possible to collect data automatically from many different sources, then consolidate and transform it into a high-performing data store; that is exactly how extract, transform, load (ETL) is achieved.
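To show how a pipeline chains the three steps and consolidates different sources, here is a compact Python sketch. The CSV text and JSON lines are hypothetical stand-ins for real sources such as an export file and a server log, and the in-memory SQLite table stands in for the target data store.

```python
import csv
import io
import json
import sqlite3

# Two hypothetical, heterogeneous sources: a CSV export and JSON log lines.
CSV_SOURCE = "id,amount\n1,10.00\n2,25.50\n"
JSON_SOURCE = ['{"order": 3, "total": 7.25}', '{"order": 4, "total": "oops"}']


def extract():
    """Extract: pull raw records from every source, tagging where each came from."""
    for row in csv.DictReader(io.StringIO(CSV_SOURCE)):
        yield "csv", row
    for line in JSON_SOURCE:
        yield "json", json.loads(line)


def transform(records):
    """Transform: map each source's field names onto one schema; skip invalid rows."""
    for source, rec in records:
        try:
            if source == "csv":
                order_id, amount = int(rec["id"]), float(rec["amount"])
            else:
                order_id, amount = int(rec["order"]), float(rec["total"])
        except (KeyError, ValueError):
            continue  # a real pipeline would log or quarantine the bad row
        yield order_id, round(amount * 100), source


def load(rows, conn):
    """Load: write the consolidated rows into a single target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, amount_cents INTEGER, source TEXT)"
    )
    with conn:  # one transaction for the whole batch
        conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", rows)


def run_pipeline(conn):
    """Chain the three stages; generators stream records from one stage to the next."""
    load(transform(extract()), conn)
    return conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
```

In production, a scheduler (for example, cron or a workflow orchestrator) would call `run_pipeline` periodically. Because the load uses `INSERT OR REPLACE` keyed on `order_id`, rerunning it over the same input does not create duplicate rows.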

In short, ETL is a vital component of any data engineering practice.